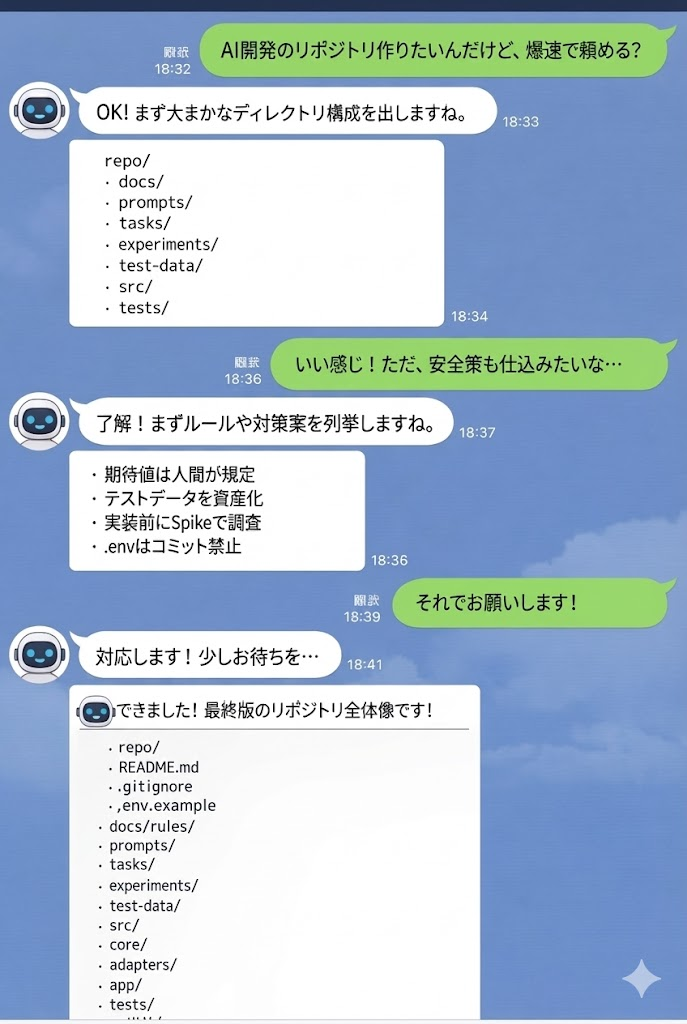

I asked an AI about development practices for working with AI. For example, many teams now try to make onboarding easier by placing instruction documents and workspace files such as CLAUDE.md in the repository.

0. Scope of This Guide

The goal here is not “process management for large enterprises,” but building the following quickly with AI:

- Improving and automating your own work (scripts, CLIs, batch jobs, ETL, notification bots, report generation…)

- Small consumer-facing (B2C) projects (small web tools, personal utilities…)

However, moving fast tends to cause the following problems:

- The AI implements things on impulse, and the result cannot be fixed later (nothing is reproducible and no rationale is recorded)

- If the AI writes the tests, they may pass with the bugs baked in (circular reference)

- Throwing away investigation and verification code means repeating the same investigations (knowledge never accumulates)

- Rules grow bloated and flood the context, so the AI misses crucial instructions

Therefore, this template is designed to “keep the speed while adding only the minimum safety devices.”

1. Assumptions (Conditions Expected by This Template)

1-1. Tool Assumptions (Tool Independence)

- Usable with Web UI / IDE extensions / CLI

- Does not rely on any tool-specific file name that is loaded automatically → Instead, the standard practice is for the human to hand the AI the “files to read”

1-2. Git Assumptions (Minimum)

- You can run `git add` / `git commit`

- You can check changes with `git diff`

Note: PR-based workflows are optional (the template works for local solo development as well).

1-3. Security Assumptions (Minimum)

- Do not commit `.env` (commit only `.env.example`)

- Do not put real data (such as customer CSVs) in the repo (use masked or dummy data)

- If you use external tools (e.g., GitHub operations), pass authentication credentials through environment variables
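As a minimal Python sketch of the environment-variable rule, assuming the `API_BASE_URL` and `API_TOKEN` names from the `.env.example` template in section 6-3 (the `Settings` class and `load_settings` function are hypothetical), the code below reads credentials only from the environment and fails fast, naming the missing variables without ever printing their values.

```python
import os
from dataclasses import dataclass, field
from typing import Mapping, Optional


@dataclass(frozen=True)
class Settings:
    api_base_url: str
    # repr=False keeps the token out of logs and tracebacks that print the object.
    api_token: str = field(repr=False)


def load_settings(env: Optional[Mapping[str, str]] = None) -> Settings:
    """Build Settings from environment variables (hypothetical helper)."""
    env = os.environ if env is None else env
    required = ("API_BASE_URL", "API_TOKEN")
    missing = [name for name in required if not env.get(name)]
    if missing:
        # Report only the variable names, never their values.
        raise RuntimeError("Missing environment variables: " + ", ".join(missing))
    return Settings(api_base_url=env["API_BASE_URL"], api_token=env["API_TOKEN"])
```

Python does not read `.env` on its own, so export the variables in your shell (or use a loader such as python-dotenv) before running. Passing `env` explicitly keeps the function easy to test without touching the real environment.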

2. Overview: What This Template Solves

2-1. Role Division (The Most Important Part)

In AI-driven development, your role is less “implementer” and more approver and owner of expectations.

What the human (you) owns:

- Definition of Done (what counts as complete)

- Expectations (input → expected output pairs)

- Approval of the implementation scope (Approval)

What can be entrusted to AI:

- Task breakdown proposals (drafts)

- Implementation

- Test harnesses and comparison logic

- Proposals for investigative experiment code (Spike)

- Summaries of changes and proposed commit messages

This division keeps “circular references” (the AI grading its own work) and “runaway” changes out of review.

2-2. Where to Place “Correctness”

In small projects, piling up documentation tends to produce documents that exist only for form's sake. Therefore, correctness lives in just three places:

- Correctness of specifications: `tests/` and `test-data/` (expectations are confirmed by humans)

- Correctness of usage and operations: `README.md` (troubleshooting is consolidated here too)

- Correctness of guardrails for AI: `docs/rules/` (a short entry point plus separate detail files)

As a rule, documents such as spec.md, which would have to be maintained in parallel with the tests, are not created.

3. Workflow (With Safety Devices, For Small Scale)

The workflow is fixed to the following five phases. Following “the same order every time” makes working with AI more stable.

1. Plan & Approve
   - The AI generates `tasks/current.md` (draft)
   - The human confirms Acceptance (expectations) and scope, then marks it `Approval: APPROVED`
   - Implementation is prohibited until it is APPROVED

2. Spike (only when necessary)
   - If dependencies or behaviors are unclear, place reproducible code in `experiments/` and observe the behavior there
   - Record the findings in `experiments/README.md` in one line (so the knowledge is kept)

3. Implement & Test
   - Keep implementations small (aim for 3-5 changed files)
   - Let the AI build the test harness, but take expectations from `test-data/golden`, confirmed by humans

4. Review & Commit
   - The human checks `git diff` (and stops unnecessary changes)
   - Record history with Conventional Commits (avoid piling up vague “fix” commits)

5. Retrospect & Update (part of the Done condition)
   - Leave at least one “learning” behind: add a golden case, add experiment code, update the rules, or append to the README
   - Save `tasks/current.md` as `tasks/YYYYMMDD-*.md`
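The archiving step at the end of phase 5 can be scripted. Below is a minimal Python sketch, assuming the `tasks/` layout from this template; `archive_current_task` and its slug rule are hypothetical choices, not part of any tool. It refuses to overwrite an existing archive so earlier history is never lost.

```python
import re
from datetime import date
from pathlib import Path
from typing import Optional


def archive_current_task(tasks_dir: Path, short_name: str,
                         today: Optional[date] = None) -> Path:
    """Rename tasks/current.md to tasks/YYYYMMDD-<slug>.md and return the new path."""
    today = today or date.today()
    # Hypothetical slug rule: lowercase, runs of other characters become "-".
    slug = re.sub(r"[^a-z0-9]+", "-", short_name.lower()).strip("-")
    if not slug:
        raise ValueError("short_name must contain at least one letter or digit")
    src = tasks_dir / "current.md"
    dst = tasks_dir / f"{today:%Y%m%d}-{slug}.md"
    if dst.exists():
        # Never overwrite an archived task; pick a different short name instead.
        raise FileExistsError(str(dst))
    src.rename(dst)
    return dst
```

For example, `archive_current_task(Path("tasks"), "Example Task", date(2025, 12, 28))` produces `tasks/20251228-example-task.md`, the same naming as the sample file in section 6-1. Running `git mv` by hand is an equally valid alternative.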

4. How to Use Artifacts (Directories)

- `docs/rules/`: Guardrails for AI (keep the index short; split details into separate files)

- `prompts/`: Reusable standard prompts (your “control panel”)

- `tasks/`: Task plans and history (success patterns kept for reuse)

- `experiments/`: Verification and reproduction code (living documents that help your future self)

- `test-data/`: Expectations confirmed by humans (the key to breaking circular references)

- `tests/`: Automated verification (part of the source of truth)
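To create these directories in a fresh repository, a minimal Python sketch could look like the following. `scaffold` is a hypothetical helper, and the `.gitkeep` placeholder is a common community convention (Git does not track empty directories), not a Git feature.

```python
from pathlib import Path
from typing import List

# Layout from the completed template (section 6-1); adjust for your project.
DIRECTORIES = [
    "docs/rules",
    "prompts",
    "tasks",
    "experiments",
    "test-data/golden",
    "src/core",
    "src/adapters",
    "src/app",
    "tests/unit",
    "tests/integration",
]


def scaffold(root: Path) -> List[Path]:
    """Create missing directories under root; return the ones created."""
    created = []
    for rel in DIRECTORIES:
        path = root / rel
        if path.exists():
            continue  # Leave existing content untouched.
        path.mkdir(parents=True)
        # Placeholder so Git can track the otherwise empty directory.
        (path / ".gitkeep").touch()
        created.append(path)
    return created
```

Running it twice is harmless: the second call finds everything in place and returns an empty list.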

5. Steps to Get Started (Quickest Way to Adopt This Template)

1. Place the “Completed Template” below in your repository as is.

2. Give the AI `docs/rules/index.md` and `tasks/current.md` (works with any tool).

3. Have the AI run `prompts/launch.md` to update `tasks/current.md`.

4. Fill in the expected outputs under `Acceptance`, and create the expected files in `test-data/golden/`.

5. Mark `Approval: APPROVED`, then start implementation.
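Because the approval step is the gate that matters most, it can help to check it mechanically, for example from a pre-commit hook or a CI job. The following Python sketch is hypothetical (`is_approved` and `gate` are illustrative names); it accepts both spellings used in this template: a literal `Approval: APPROVED` line, or `Status: APPROVED` inside the `# Approval` section of `tasks/current.md`.

```python
from pathlib import Path


def is_approved(task_text: str) -> bool:
    """Return True if tasks/current.md records human approval."""
    in_approval_section = False
    for raw_line in task_text.splitlines():
        line = raw_line.strip()
        if line.startswith("#"):
            # Every heading starts a new section; track whether it is "# Approval".
            in_approval_section = line.lstrip("#").strip().lower() == "approval"
            continue
        compact = line.replace(" ", "")
        if compact == "Approval:APPROVED":
            return True
        if in_approval_section and compact == "Status:APPROVED":
            return True
    return False


def gate(path: str = "tasks/current.md") -> int:
    """Exit-code style check: 0 when implementation may start, 1 otherwise."""
    text = Path(path).read_text(encoding="utf-8")
    if is_approved(text):
        print("APPROVED: implementation may start.")
        return 0
    print("Not approved yet: keep planning.")
    return 1
```

A small script of your own can call `sys.exit(gate())`, so a hook or CI step fails while the task is still `WAITING`.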

6. Full Completed Template (Copy and Paste as Is)

Below is the full content of every file to place directly under the repository root.

6-1. Directory Structure (Completed Version)

```text
repo/
├── README.md
├── .gitignore
├── .env.example
├── docs/
│   └── rules/
│       ├── index.md
│       ├── workflow.md
│       ├── testing.md
│       ├── security.md
│       └── coding.md
├── prompts/
│   ├── launch.md
│   ├── onboard.md
│   ├── think.md
│   ├── spike.md
│   ├── implement.md
│   ├── test.md
│   ├── review.md
│   └── retrospect.md
├── tasks/
│   ├── current.md
│   └── 20251228-example-task.md
├── experiments/
│   ├── README.md
│   └── 20251228-example_spike.md
├── test-data/
│   ├── README.md
│   └── golden/
│       ├── README.md
│       ├── case01_input.json
│       └── case01_expected.json
├── src/
│   ├── core/
│   ├── adapters/
│   └── app/
└── tests/
    ├── unit/
    └── integration/
```

6-2. .gitignore (Completed Version)

```gitignore
# Secrets
.env

# Logs & temp
*.log
*.tmp
*.bak
*.swp

# OS
.DS_Store
Thumbs.db

# Language/runtime (adjust as needed)
__pycache__/
.pytest_cache/
node_modules/
dist/
build/
coverage/
```

6-3. .env.example (Completed Version)

```text
# Copy to .env (DO NOT COMMIT .env)
# Example:
# API_BASE_URL=https://example.com
# API_TOKEN=replace_me
```

6-4. README.md (Completed Version)

```markdown
# AI-Driven Development (Prompt-Driven Development) Template

This template is for rapidly building business improvements, automation, and small consumer-facing (B2C) tools together with AI, while securing "reproducibility," "knowledge kept as assets," and "prevention of runaway changes" at minimal cost.

---

## Purpose

- Build quickly (using AI)
- Make it robust (minimum safety devices)
- Keep it fixable later (preserve prompts, investigations, and expectations as assets)
- Stay tool-independent (usable with Web UI / IDE / CLI)

---

## Key Principles (Short Version)

- **Expectations (input → expected output) are confirmed by humans** (prevents the AI's circular references)
- **Commit prompts, task plans, and verification code** (essential for reproducibility)
- For anything unclear, **run a Spike in `experiments/` before implementing**
- Rules are **an entry point + split files** (keep `docs/rules/index.md` short)
- Done must include **an asset update** (a new golden case, experiment, rule, or README entry)

---

## Files to Always Provide to AI (Tool-Independent)

Provide these to the AI when starting or resuming:

1) docs/rules/index.md
2) tasks/current.md

*Note: Do not rely on automatic loading. Paste the files if using a Web UI, or tell the tool to read them if using an IDE/CLI.*

---

## Workflow (With Safety Devices)

1) Plan & Approve
- AI generates/updates tasks/current.md (draft)
- Human confirms Acceptance (expectations) and marks Approval as APPROVED
- Implementation is prohibited until it becomes APPROVED

2) Spike (if necessary)
- Place reproduction/investigation code in experiments/
- Record results in experiments/README.md

3) Implement & Test
- Keep changes small (aim for 3-5 files)
- AI builds the test harness; expectations come from human-confirmed test-data

4) Review & Commit
- Human checks git diff (stop unnecessary changes)
- Keep a meaningful history with Conventional Commits

5) Retrospect & Update (Done requirement)
- Do at least one of:
  - Add golden test data
  - Add an experiment (repro/investigation)
  - Update docs/rules or README
- Archive tasks/current.md into tasks/YYYYMMDD-*.md
```

6-5. docs/rules/index.md (Short Entry)

```markdown
# AI Working Agreement (Index)

## Read order (start/resume)

1) tasks/current.md
2) docs/rules/workflow.md
3) docs/rules/testing.md
4) docs/rules/security.md (if touching auth/data/secrets)
5) docs/rules/coding.md

## Hard rules (must follow)

- Do NOT code until tasks/current.md shows `Approval: APPROVED`.
- Human owns expected outputs (golden data). Do not invent expectations.
- If behavior is unclear, create a Spike under experiments/ before implementing.
- Never commit secrets (.env is forbidden). Use .env.example only.
- Touch only files related to the current task.
- Use Conventional Commits. Every commit should be meaningful.
```

6-6. docs/rules/workflow.md

```markdown
# Workflow Rules (Small Project)

## Phases

1) Plan & Approve
- Generate/Update tasks/current.md
- Wait for human approval (APPROVED)

2) Spike (optional)
- Add experiments/ repro or investigation code
- Record result in experiments/README.md (1 line is enough)

3) Implement & Test
- Small changes per step (3-5 files, reasonable diff)
- Add/Update tests and/or test-data

4) Review & Commit
- Human checks git diff
- Conventional Commits

5) Retrospect & Update (Done requirement)
- Add at least one of:
  - new golden test-data case, or
  - new experiment/repro, or
  - rule update in docs/rules/ or README
- Archive tasks/current.md into tasks/YYYYMMDD-*.md
```

6-7. docs/rules/testing.md (Core to Prevent Circular References)

```markdown
# Testing Rules

## Source of truth

- Truth = tests/ + test-data/
- Expected outputs (golden) are HUMAN-OWNED.

## Human-owned artifacts

- test-data/golden/*_expected.* (or snapshots)
- tasks/current.md Acceptance section

## AI responsibilities

- Create test harness and assertions that compare actual vs human-provided expected.
- Do NOT modify expected outputs unless explicitly instructed by human.

## When adding a feature/fix

- Add/Update at least one golden test-data case.
- Cover boundaries and error cases (empty, null, malformed, large input).

## Anti-cheat rules

- Avoid writing tests that only mirror current implementation.
- Prefer black-box tests using golden input/output.
```

6-8. docs/rules/security.md

```markdown
# Security & Privacy Rules (Small project baseline)

## Secrets

- Never commit .env. Only commit .env.example.
- No secrets in code, comments, tests, or logs.
- If a secret is found, STOP and warn the user.

## Data handling

- Do not add real customer/user data to repo.
- Use masked or synthetic datasets in test-data/ if needed.

## Token usage (optional tools)

- If using gh CLI, authentication is required:
  - local: `gh auth login`
  - CI/container: set `GH_TOKEN` and verify `gh auth status`
- Keep scopes minimal. Prefer read-only scopes unless needed.

## External tools / integrations

- Avoid introducing new external agents/tools without noting risks in tasks/current.md.
```

6-9. docs/rules/coding.md

```markdown
# Coding Rules

## Structure

- src/core: pure logic (no I/O)
- src/adapters: external I/O (API/DB/files)
- src/app: entrypoints (CLI/HTTP/job)

## Change scope

- Only edit files needed for current task.
- If broader refactor is needed, propose it in tasks/current.md and wait for approval.

## Style

- Keep functions small and single-responsibility.
- Prefer explicit error handling for external calls.

## Commit messages (Conventional Commits)

- feat: ...
- fix: ...
- refactor: ...
- test: ...
- docs: ...
- chore: ...
```

6-10. prompts/launch.md

```markdown
You are an assistant for creating development plans.

Read the following and create or update tasks/current.md.

Read order:

1) docs/rules/index.md
2) README.md
3) existing tasks/current.md (if available)

Output requirements:

- tasks/current.md is a "plan document for human approval."
- Put Goal / Non-goals / Acceptance (Human-owned) at the top.
- Split the Plan into steps small enough for the AI to handle within one context:
  - About 3-5 files to change
  - If the diff is large, split it further
- If anything is unclear, include a Spike in the Plan, to be placed in experiments/ before proceeding.
- Approval must be set to `WAITING` (implementation is prohibited until the human changes it to APPROVED).
- Expected outputs are confirmed by humans, so do not make them up; leave a blank for humans to fill in.
```

6-11. prompts/onboard.md

```markdown
Resuming work. Please do the following:

1) Read docs/rules/index.md and tasks/current.md, and summarize the current situation.
2) Select one checklist item from tasks/current.md (make sure Approval is APPROVED).
3) For that one item, briefly present "candidate files to change," "implementation approach," and "verification method."
4) Do not write code yet (planning only).
```

6-12. prompts/think.md

```markdown
Before implementing, summarize your approach for the following subtask:

- Which layer changes (core, adapters, or app)
- Exceptions and boundary conditions
- If dependencies are unclear, whether a Spike is needed
- What golden test data should be added (the expected values will be written by humans)
```

6-13. prompts/spike.md

```markdown
Conducting a Spike (investigation).

- Assuming the work goes into experiments/YYYYMMDD-<topic>, lay out the investigation steps and the observations you expect.
- Write down what must be understood before the main implementation can proceed (Go/No-Go conditions).
- Also draft the entry that records the result in experiments/README.md.
```

6-14. prompts/implement.md

```markdown
Entering implementation. Rules:

- Implement only within the APPROVED scope of tasks/current.md
- Keep changed files to a minimum (aim for 3-5 files)
- Do not change expected values (the expected files in test-data) unless a human has confirmed the change
- Encapsulate external I/O in adapters
- After implementation, explain "how to verify" (commands and what to observe)
```

6-15. prompts/test.md

```markdown
Preparing tests.

- Write the test code (the comparison harness) under tests/
- Have it read the input/expected pairs from test-data/golden
- Treat the contents of expected files as placeholders, since humans will prepare them
- Propose additional boundary/error cases (but do not create their expected values)
```

6-16. prompts/review.md

```markdown
Preparing for review.

- List the changed files.
- Self-check for any unexpected changes.
- Present a table showing how each Acceptance item is satisfied.
- Propose commit messages in Conventional Commits format.
```

6-17. prompts/retrospect.md

```markdown
Reflect and turn what you learned into assets.

- What went well / what did not
- Rules to follow in the next similar task (suggest where to add them in docs/rules)
- Proposals for additional golden test-data cases or reproduction code in experiments/
- Propose a name for saving tasks/current.md as tasks/YYYYMMDD-<short>.md
```

6-18. tasks/current.md (Template)

```markdown
# Goal

(Human writes) What this task aims to achieve in 1-3 lines.

# Non-goals

(Human writes) What will not be done this time. Prevents scope creep.

# Acceptance (Human-owned)

*Expectations are confirmed by humans. AI does not create them.*

- Case01: input = test-data/golden/case01_input.json -> expected = test-data/golden/case01_expected.json
- Case02: input = ... -> expected = ...

# Plan (AI drafts, Human approves)

- [ ] Spike: (if necessary) Add dependency/existing code behavior confirmation to experiments/
- [ ] Implement: Change src/... (target files:)
- [ ] Test: Add tests/... (golden comparison)
- [ ] Review: Create summary of changes and proposed commit messages
- [ ] Retrospect: Add golden cases or update rules or add experiments (Done condition)

# Notes

- Constraints, pitfalls, notes

# Approval

Status: WAITING

Approved by:

Approved at:
```

6-19. experiments/README.md

```markdown
# experiments/

Place reproducible code for confirming library behaviors, reproducing bugs, and checking boundary conditions.

This is a "living document." Do not discard it (commit it to the repository).

## Index

- 20251228-example_spike.md: Example
```

6-20. experiments/20251228-example_spike.md

```markdown
# Spike: Example

Purpose:

- Confirm the input format of Library X

Go/No-Go:

- No exceptions with Input A
- Output in this format with Input B (observation)

Results:

- (To be added after execution)
```

6-21. test-data/README.md

```markdown
# test-data/

Place "expected values" confirmed by humans.

To prevent AI's circular references, expected values are confirmed by human review.

- golden/: Black-box input/expected output pairs
```

6-22. test-data/golden/README.md

```markdown
# golden test-data

Naming:

- caseNN_input.*
- caseNN_expected.*

Operation:

- Expected values are confirmed by humans (AI does not create them arbitrarily)
- Add boundary/abnormal cases.
```

6-23. Sample test-data/golden/case01_input.json

```json
{
  "example": "input"
}
```

6-24. Sample test-data/golden/case01_expected.json

```json
{
  "example": "expected"
}
```
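The golden pairs above can drive a black-box test. Below is a minimal Python sketch of the comparison harness described in `docs/rules/testing.md`; `load_golden_cases`, `run_golden`, and the function under test are hypothetical names. It pairs every `caseNN_input.json` with its `caseNN_expected.json`, treats a missing expectation as a human TODO rather than something to generate, and never writes to the expected files.

```python
import json
from pathlib import Path
from typing import Any, Callable, List, Tuple


def load_golden_cases(golden_dir: Path) -> List[Tuple[str, Any, Any]]:
    """Return (case_id, input, expected) for each caseNN_input.json file."""
    cases = []
    for input_path in sorted(golden_dir.glob("case*_input.json")):
        case_id = input_path.name[: -len("_input.json")]
        expected_path = golden_dir / f"{case_id}_expected.json"
        if not expected_path.exists():
            # Expected outputs are human-owned: stop instead of inventing one.
            raise FileNotFoundError(f"{expected_path} is missing (human-owned)")
        given = json.loads(input_path.read_text(encoding="utf-8"))
        expected = json.loads(expected_path.read_text(encoding="utf-8"))
        cases.append((case_id, given, expected))
    return cases


def run_golden(golden_dir: Path, fn: Callable[[Any], Any]) -> List[str]:
    """Run fn on every golden input; return the IDs of mismatching cases."""
    return [case_id for case_id, given, expected in load_golden_cases(golden_dir)
            if fn(given) != expected]
```

In a pytest test this can be as short as `assert run_golden(Path("test-data/golden"), transform) == []`, where `transform` stands in for whichever entry point in `src/core` the task touches. Returning case IDs rather than stopping at the first failure makes it easy to see every broken case in one run.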